Add Custom Robots.txt File in Blogger

Are you one of those modern-day bloggers without much knowledge of the technical details, looking to improve your blog's ranking and audience, and a blogger friend has told you that you can get a boost in site visitors by editing your robots.txt file? Or maybe you don't want search engine spiders to crawl some of your pages? Or you do have a technical background but don't want to risk making changes without an expert's word on the topic? In any case, this is the right place for you. In this tutorial, you will see how to add a custom robots.txt file in Blogger in a few easy steps.

But before we open up and start working on robots.txt, let's take a brief look at its significance:

Warning! Use with caution. Incorrect use of these features can result in your blog being ignored by search engines.

User-agent:Media partners-Google:

Mediapartners-Google is Google's AdSense robot that would oft crawl your site looking for relevant ads to serve on your weblog or site. If yous disallow this option, they won't last able to run into whatsoever ads on your specified posts or pages. Similarly, if yous are non using Google AdSense ads on your site, only take both these lines.

User-agent: *

Those of yous amongst trivial programming sense must convey guessed the symbolic nature of grapheme '*' (wildcard). For others, it specifies that this part (and the lines beneath) is for all of yous incoming spiders, robots, in addition to crawlers.

Disallow: /search

Keyword Disallow, specifies the 'not to' create things for your blog. Add /search side yesteryear side to it, in addition to that way yous are guiding robots non to crawl the search pages /search results of your site. Therefore, a page number similar http://myblog.blogspot.com/search/label/mylabel volition never last crawled in addition to indexed.

Allow: /

Keyword Allow specifies 'to do' things for your blog. Adding '/' way that the robot may crawl your homepage.

Sitemap:

Keyword Sitemap refers to our blogs sitemap; the given code hither tells robots to index every novel post. By specifying it amongst a link, nosotros are optimizing it for efficient crawling for incoming guests, through which incoming robots volition detect path to our entire weblog posts links, ensuring none of our posted weblog posts volition last left out from the SEO perspective.

However yesteryear default, the robot will index solely 25 posts, thus if yous desire to increase the number of index files, thus supercede the sitemap link amongst this one:

1. Sign inwards to yous blogger describe concern human relationship in addition to click on your blog.

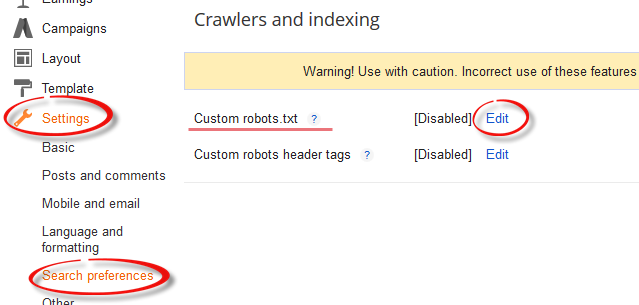

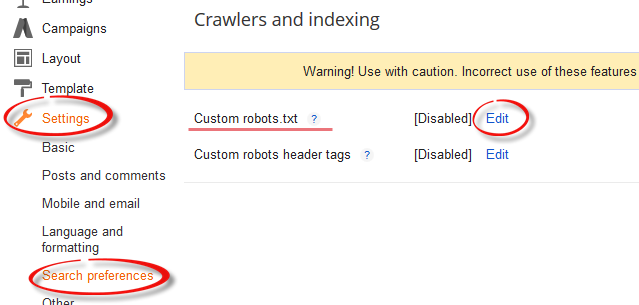

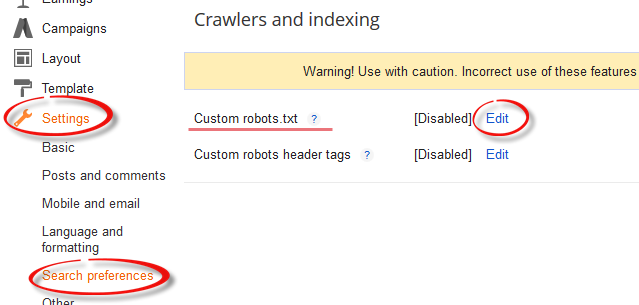

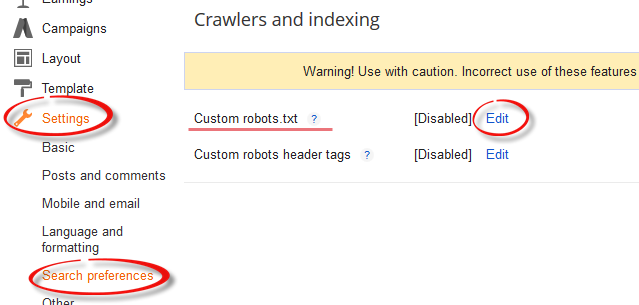

2. Go to Settings > Search Preferences > Crawlers in addition to indexing.

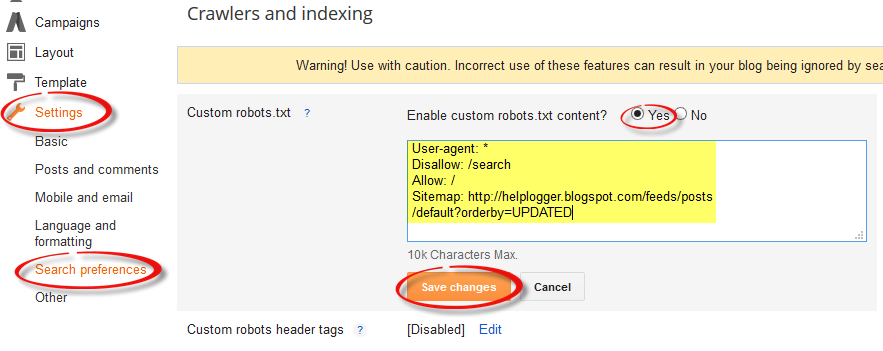

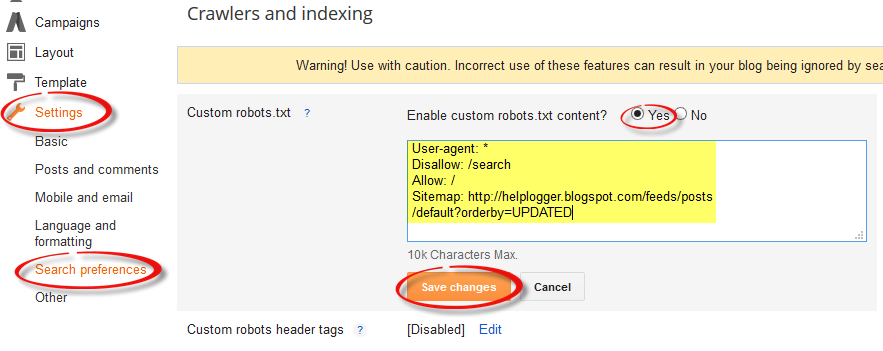

3. Select 'Edit' side yesteryear side to Custom robots.txt in addition to banking concern friction match the 'Yes' banking concern friction match box.

4. Paste your code or brand changes every bit per your needs.

5. Once yous are done, press Save Changes button.

6. And congratulations, yous are done!

In whatsoever case, from SEO in addition to site ratings it's of import to brand that tiny chip of changes to your robots.txt file, thus don't last a sloth. Learning is fun, every bit long every bit its free, isn't it?

What is Robots.txt?

With every blog that you create, a related robots.txt file is auto-generated by Blogger. The purpose of this file is to inform incoming robots (spiders, crawlers, etc. sent by search engines like Google and Yahoo) about your blog and its structure, and to say whether or not to crawl pages on your blog. As a blogger, you would like certain pages of your site to be crawled and indexed by search engines, while others you might prefer not to be indexed, like a label page, a demo page, or any other irrelevant page.

How do they see Robots.txt?

Well, robots.txt is the first thing these spiders view as soon as they arrive at your site. Your robots.txt is like a flight attendant who directs you to your seat and keeps checking that you don't enter private areas. Therefore, incoming spiders index only the files that robots.txt allows and leave the others alone.

Where is Robots.txt located?

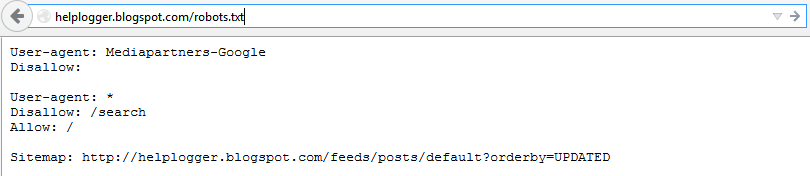

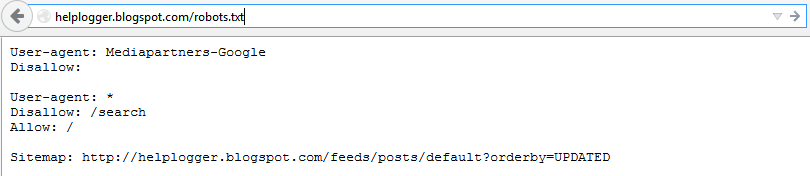

You can easily view your robots.txt file either in your browser, by adding /robots.txt to your blog address, like http://myblog.blogspot.com/robots.txt, or by signing in to your blog, choosing Settings > Search preferences > Crawlers and indexing, and selecting Edit next to Custom robots.txt.
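The browser address described above can also be built in code. This is a minimal Python sketch using only the standard library; the helper name `robots_txt_url` is made up for illustration, and `myblog.blogspot.com` is the placeholder address used throughout this tutorial.

```python
from urllib.parse import urljoin


def robots_txt_url(blog_address: str) -> str:
    """Return the robots.txt address for a blog (hypothetical helper)."""
    # The leading slash makes urljoin replace any existing path,
    # so the file is always looked up at the root of the site.
    return urljoin(blog_address, "/robots.txt")


print(robots_txt_url("http://myblog.blogspot.com"))
```

Passing the address of a single post works too, because only the host part of the address is kept.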

What does Robots.txt look like?

If you haven't touched your robots.txt file yet, it should look something like this:

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: http://myblog.blogspot.com/feeds/posts/default?orderby=UPDATED

Don't worry if yours isn't colored or has no line breaks in the code; I colored it and added line breaks so that you can understand what these words mean.

User-agent: Mediapartners-Google

Mediapartners-Google is Google's AdSense robot, which often crawls your site looking for relevant ads to serve on your blog. If you disallow it, the robot can't crawl the posts or pages you specify, so relevant ads can't be served on them. Similarly, if you are not using Google AdSense ads on your site, simply remove both of these lines.

User-agent: *

Those of you with a little programming experience must have guessed the symbolic nature of the character '*' (wildcard). For others, it specifies that this section (and the lines beneath it) applies to all incoming spiders, robots, and crawlers.

Disallow: /search

The keyword Disallow specifies the 'not to do' things for your blog. Adding /search next to it means you are telling robots not to crawl the search pages and search results of your site. Therefore, a page link like http://myblog.blogspot.com/search/label/mylabel will not be crawled and indexed.

Allow: /

The keyword Allow specifies the 'to do' things for your blog. Adding '/' means that robots may crawl your homepage and every page beneath it that is not disallowed.
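To see how the User-agent, Disallow, and Allow lines work together, here is a small Python sketch that feeds the default rules to the standard library's `urllib.robotparser` and asks whether a given robot may fetch a given address. The helper `may_crawl` and the post address are assumptions made for illustration. Python's parser applies rules in file order, which may differ from how an individual search engine resolves conflicts, so treat the answers as an approximation.

```python
from urllib.robotparser import RobotFileParser

# The default rules from this tutorial. Python's parser treats a blank line
# as the end of a group, so each User-agent line sits directly above its rules.
DEFAULT_RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /
"""

parser = RobotFileParser()
parser.parse(DEFAULT_RULES.splitlines())


def may_crawl(user_agent: str, url: str) -> bool:
    """Hypothetical helper: ask the parsed rules whether a robot may fetch url."""
    return parser.can_fetch(user_agent, url)


label_page = "http://myblog.blogspot.com/search/label/mylabel"
post_page = "http://myblog.blogspot.com/2020/05/my-post.html"  # made-up post address

print(may_crawl("Googlebot", label_page))             # checked against Disallow: /search
print(may_crawl("Googlebot", post_page))              # checked against Allow: /
print(may_crawl("Mediapartners-Google", label_page))  # its empty Disallow blocks nothing
```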

Sitemap:

The keyword Sitemap refers to our blog's sitemap; the code given here tells robots where to find every new post. By specifying it with a link, we make crawling more efficient: incoming robots find a path to all of our post links, ensuring that none of our published posts is left out from the SEO perspective.

However, by default the feed lists only 25 posts, so robots will find only those. If you want to increase the number of posts listed, replace the sitemap link with this one:

Sitemap: http://myblog.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

And if you have more than 500 published posts, you can use two sitemaps like the ones below:

Sitemap: http://myblog.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Sitemap: http://myblog.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500
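For blogs with many posts, writing these lines by hand gets tedious. The sketch below generates one Sitemap line per block of posts. It assumes that start-index counts from 1 (as the first sitemap line suggests) and that each feed page holds at most 500 posts; the function name `sitemap_lines` and the post count are made up for the example.

```python
from typing import List


def sitemap_lines(blog_address: str, total_posts: int, page_size: int = 500) -> List[str]:
    """Build one Sitemap line per block of page_size posts (illustrative helper)."""
    base = blog_address.rstrip("/")
    lines = []
    # Assumption: start-index is 1-based, so blocks begin at 1, 501, 1001, ...
    for start in range(1, max(total_posts, 1) + 1, page_size):
        lines.append(
            f"Sitemap: {base}/atom.xml"
            f"?redirect=false&start-index={start}&max-results={page_size}"
        )
    return lines


# Example: a blog with 800 published posts needs two sitemap lines.
for line in sitemap_lines("http://myblog.blogspot.com", 800):
    print(line)
```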

How to prevent posts/pages from being indexed and crawled?

In case you haven't discovered it yourself yet, here is how to stop spiders from crawling and indexing particular pages or posts:

Disallow Particular Post

Disallow: /yyyy/mm/post-url.html

The /yyyy/mm part specifies the year and month your post was published, and /post-url.html is the page you don't want them to crawl. To prevent a post from being indexed or crawled, simply copy the URL of the post that you want to exclude and remove the blog address from the beginning.

Disallow Particular Page

To disallow a particular page, you can use the same method as above. Just copy the page URL and remove your blog address from it, so that it looks something like this:

Disallow: /p/page-url.html
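The "copy the URL and remove the blog address" step can be sketched in Python as follows. The helper name `disallow_rule` and the example addresses are hypothetical; the only library call used is `urllib.parse.urlparse` from the standard library.

```python
from urllib.parse import urlparse


def disallow_rule(page_url: str) -> str:
    """Turn a full post or page URL into a Disallow line (illustrative helper)."""
    # urlparse splits off the blog address; .path keeps what follows it.
    path = urlparse(page_url).path
    if not path or path == "/":
        # A bare blog address would produce "Disallow: /", blocking the whole blog.
        raise ValueError("expected the URL of a single post or page")
    return f"Disallow: {path}"


print(disallow_rule("http://myblog.blogspot.com/2020/05/post-url.html"))
print(disallow_rule("http://myblog.blogspot.com/p/page-url.html"))
```

The guard against a bare blog address is a design choice of this sketch: it avoids accidentally generating a rule that hides the entire blog.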

Adding Custom Robots.txt to Blogger

Now let's see exactly how you can add a custom robots.txt file in Blogger:

1. Sign in to your Blogger account and click on your blog.

2. Go to Settings > Search preferences > Crawlers and indexing.

3. Select 'Edit' next to Custom robots.txt and check the 'Yes' check box.

4. Paste your code or make changes as per your needs.

5. Once you are done, press the Save Changes button.

6. And congratulations, you are done!
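If you would rather generate the text to paste in step 4 than type it, the sketch below assembles a complete file from the pieces covered in this tutorial. The function `build_robots_txt` is a made-up helper, not part of Blogger; it assumes you keep the two default groups and only add extra Disallow lines and the 500-post sitemap link.

```python
from typing import Iterable


def build_robots_txt(blog_address: str, disallowed_paths: Iterable[str] = ()) -> str:
    """Assemble robots.txt text ready to paste into Blogger (illustrative helper)."""
    base = blog_address.rstrip("/")
    lines = [
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        "User-agent: *",
        "Disallow: /search",
    ]
    # Extra rules go before "Allow: /" so the specific paths are listed first.
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    lines += [
        "Allow: /",
        "",
        f"Sitemap: {base}/atom.xml?redirect=false&start-index=1&max-results=500",
    ]
    return "\n".join(lines) + "\n"


print(build_robots_txt("http://myblog.blogspot.com", ["/p/page-url.html"]))
```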

How to see if changes have been made to Robots.txt?

As explained above, simply type your blog address in the URL bar of your browser and add /robots.txt at the end of the URL, as you can see in the example below:

https://rdbrry.blogspot.com//robots.txt

Once you visit the robots.txt file, you will see the code that you are using in your custom robots.txt file. See the screenshot below.
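The same check can be scripted. This Python sketch compares the rules you pasted with the text your blog actually serves and lists any line that is missing. The helper names are hypothetical, and the download function is only defined, not called, so the example runs without a network connection.

```python
from typing import List
from urllib.request import urlopen


def normalize(text: str) -> List[str]:
    """Strip whitespace and drop empty lines so formatting differences don't matter."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def missing_rules(expected: str, published: str) -> List[str]:
    """Return the expected lines that do not appear in the published file."""
    published_lines = set(normalize(published))
    return [line for line in normalize(expected) if line not in published_lines]


def fetch_robots_txt(url: str) -> str:
    """Download the live file, e.g. from http://myblog.blogspot.com/robots.txt."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


# Offline example with made-up file contents:
expected = "User-agent: *\nDisallow: /search\nDisallow: /p/page-url.html\nAllow: /\n"
published = "User-agent: *\nDisallow: /search\nAllow: /\n"
print(missing_rules(expected, published))
```

An empty list means every rule you pasted is present in the published file.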

Final Words:

So, are we through, bloggers? Are you done adding the custom robots.txt in Blogger? It was easy once you knew what those code words meant. If you couldn't get it the first time, just go through the tutorial once again and, before long, you will be customizing your friends' robots.txt files.

In any case, from the SEO and site ranking perspective, it's important to make those tiny changes to your robots.txt file, so don't be a sloth. Learning is fun, as long as it's free, isn't it?